The OpenAI Keynote

OpenAI’s developer keynote was exciting, both because AI is exciting, and because OpenAI has the potential to be a meaningful consumer tech company.

In 2013, when I started Stratechery, there was no bigger event than the launch of the new iPhone; its only rival was Google I/O, which is when the newest version of Android was unveiled (hardware always breaks the tie, including with Apple’s iOS introductions at WWDC). It wasn’t just that smartphones were relatively new and still adding critical features, but that the strategic decisions and ultimate fates of the platforms were still an open question. More than that, the entire future of the tech industry was clearly tied up in said platforms and their corresponding operating systems and devices; how could keynotes not be a big deal?

Fast forward a decade and the tech keynote has diminished in importance and, in the case of Apple, disappeared completely, replaced by a pre-recorded marketing video. I want to be mad about it, but it makes sense: an iPhone introduction has been diminished not by Apple’s presentation, but rather Apple’s presentations reflect the reality that the most important questions around an iPhone are about marketing tactics. How do you segment the iPhone line? How do you price? What sort of brand affinity are you seeking to build? There, I just summarized the iPhone 15 introduction, and the reality that the smartphone era — The End of the Beginning — is over as far as strategic considerations are concerned. iOS and Android are a given, but what is next and yet unknown?

The answer is, clearly, AI, but even there, the energy seems muted: Apple hasn’t talked about generative AI other than to assure investors on earnings calls that they are working on it; Google I/O was of course about AI, but mostly in the context of Google’s own products — few of which have actually shipped — and my Article at the time was quickly side-tracked into philosophical discussions about the nature of AI innovation (sustaining versus disruptive), the question of tech revolution versus alignment, and a preview of the coming battles of regulation that arrived with last week’s Executive Order on AI.

Meta’s Connect keynote was much more interesting: not only were AI characters being added to Meta’s social networks, but next year you will be able to take AI with you via Smart Glasses (I told you hardware was interesting!). Nothing, though, seemed to match the energy around yesterday’s OpenAI developer conference, their first ever: there is nothing more interesting in tech than a consumer product with product-market fit. And that, for me, is enough to bring back an old Stratechery standby: the keynote day-after.

Keynote Metaphysics and GPT-4 Turbo

This was, first and foremost, a really good keynote, in the keynote-as-artifact sense. CEO Sam Altman, in a humorous exchange with Microsoft CEO Satya Nadella, promised, “I won’t take too much of your time”; never mind that Nadella was presumably in San Francisco just for this event: in this case he stood in for the audience who witnessed a presentation that was tight, with content that was interesting, leaving them with a desire to learn more.

Altman himself had a good stage presence, with the sort of nervous energy that is only present in a live keynote; the fact he never seemed to know which side of the stage a fellow presenter was coming from was humanizing. Meanwhile, the live demos not only went off without a hitch, but leveraged the fact that they were live: in one instance a presenter instructed a GPT she created to text Altman; he held up his phone to show he got the message. In another a GPT randomly selected five members of the audience to receive $500 in OpenAI API credits, only to then extend it to everyone.

New products and features, meanwhile, were available “today”, not weeks or months in the future, as is increasingly the case for events like I/O or WWDC; everything combined to give a palpable sense of progress and excitement, which, when it comes to AI, is mostly true.

GPT-4 Turbo is an excellent example of what I mean by “mostly”. The API consists of six new features:

- Increased context length

- More control, specifically in terms of model inputs and outputs

- Better knowledge, which means both updating the cut-off date for knowledge about the world to April 2023 and providing the ability for developers to easily add their own knowledge base

- New modalities, as DALL-E 3, Vision, and TTS (text-to-speech) will all be included in the API, with a new version of Whisper speech recognition coming

- Customization, including fine-tuning, and custom models (which, Altman warned, won’t be cheap)

- Higher rate limits

This is, to be clear, still the same foundational model (GPT-4); these features just make the API more usable, both in terms of features and also performance. It also speaks to how OpenAI is becoming more of a product company, with iterative enhancements of its core functionality. Yes, the mission still remains AGI (artificial general intelligence), and the core scientific team is almost certainly working on GPT-5, but Altman and team aren’t just tossing models over the wall for the rest of the industry to figure out.

Price and Microsoft

The next “feature” was tied into the GPT-4 Turbo introduction: the API is getting cheaper (3x cheaper for input tokens, and 2x cheaper for output tokens). Unsurprisingly this announcement elicited cheers from the developers in attendance; what I cheered as an analyst was Altman’s clear articulation of the company’s priorities: lower price first, speed later. You can certainly debate whether that is the right set of priorities (I think it is, because the biggest need now is for increased experimentation, not optimization), but what I appreciated was the clarity.
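To make the two ratios concrete, here is a minimal sketch of how the savings depend on the mix of input and output tokens. The baseline prices are hypothetical placeholders chosen only for the arithmetic; the only figures taken from the keynote are the 3x and 2x ratios, and the names `Pricing`, `BASELINE`, `TURBO`, and `savings_fraction` are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Price per 1,000 tokens, in dollars."""
    input_per_1k: float
    output_per_1k: float

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        """Total bill for a given number of input and output tokens."""
        return (
            (input_tokens / 1000) * self.input_per_1k
            + (output_tokens / 1000) * self.output_per_1k
        )


# Hypothetical baseline prices (assumption, not from the keynote).
BASELINE = Pricing(input_per_1k=0.03, output_per_1k=0.06)

# The ratios stated in the keynote: input 3x cheaper, output 2x cheaper.
TURBO = Pricing(
    input_per_1k=BASELINE.input_per_1k / 3,
    output_per_1k=BASELINE.output_per_1k / 2,
)


def savings_fraction(input_tokens: int, output_tokens: int) -> float:
    """Fraction of the baseline bill saved for a given token mix."""
    old = BASELINE.cost(input_tokens, output_tokens)
    new = TURBO.cost(input_tokens, output_tokens)
    return 1 - new / old
```

Under these assumptions a prompt-heavy workload saves close to two-thirds of its bill, while an output-heavy one saves closer to half; anything in between lands between those bounds.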

It’s also appropriate that the segment after that was the brief “interview” with Nadella: OpenAI’s pricing is ultimately a function of Microsoft’s ability to build the infrastructure to support that pricing. Nadella actually explained how Microsoft is accomplishing that on the company’s most recent earnings call:

It is true that the approach we have taken is a full stack approach all the way from whether it’s ChatGPT or Bing Chat or all our Copilots, all share the same model. So in some sense, one of the things that we do have is very, very high leverage of the one model that we used, which we trained, and then the one model that we are doing inferencing at scale. And that advantage sort of trickles down all the way to both utilization internally, utilization of third parties, and also over time, you can see the sort of stack optimization all the way to the silicon, because the abstraction layer to which the developers are writing is much higher up than low-level kernels, if you will.

So, therefore, I think there is a fundamental approach we took, which was a technical approach of saying we’ll have Copilots and Copilot stack all available. That doesn’t mean we don’t have people doing training for open source models or proprietary models. We also have a bunch of open source models. We have a bunch of fine-tuning happening, a bunch of RLHF happening. So there’s all kinds of ways people use it. But the thing is, we have scale leverage of one large model that was trained and one large model that’s being used for inference across all our first-party SaaS apps, as well as our API in our Azure AI service…

The lesson learned from the cloud side is — we’re not running a conglomerate of different businesses, it’s all one tech stack up and down Microsoft’s portfolio, and that, I think, is going to be very important because that discipline, given what the spend like — it will look like for this AI transition any business that’s not disciplined about their capital spend accruing across all their businesses could run into trouble.

The fact that Microsoft is benefiting from OpenAI is obvious; what this makes clear is that OpenAI uniquely benefits from Microsoft as well, in a way they would not from another cloud provider: because Microsoft is also a product company investing in the infrastructure to run OpenAI’s models for said products, it can afford to optimize and invest ahead of usage in a way that OpenAI alone, even with the support of another cloud provider, could not. In this case that is paying off in developers needing to pay less, or, ideally, have more latitude to discover use cases that result in them paying far more because usage is exploding.

GPTs and Computers

I mentioned GPTs before; you were probably confused, because this is a name that is either brilliant or a total disaster. Of course you could have said the same about ChatGPT: massive consumer uptake has a way of making arguably poor choices great ones in retrospect, and I can see why OpenAI is seeking to basically brand “GPT” — generative pre-trained transformer — as an OpenAI chatbot.

Regardless, this is how Altman explained GPTs:

GPTs are tailored versions of ChatGPT for a specific purpose. You can build a GPT — a customized version of ChatGPT — for almost anything, with instructions, expanded knowledge, and actions, and then you can publish it for others to use. And because they combine instructions, expanded knowledge, and actions, they can be more helpful to you. They can work better in many contexts, and they can give you better control. They’ll make it easier for you to accomplish all sorts of tasks or just have more fun, and you’ll be able to use them right within ChatGPT. You can, in effect, program a GPT, with language, just by talking to it. It’s easy to customize the behavior so that it fits what you want. This makes building them very accessible, and it gives agency to everyone.

We’re going to show you what GPTs are, how to use them, how to build them, and then we’re going to talk about how they’ll be distributed and discovered. And then after that, for developers, we’re going to show you how to build these agent-like experiences into your own apps.

Altman’s examples included a lesson-planning GPT from Code.org and a natural language vision design GPT from Canva. As Altman noted, the second example might have seemed familiar: Canva had a plugin for ChatGPT, and Altman explained that “we’ve evolved our plugins to be custom actions for GPTs.”

I found the plugin concept fascinating and a useful way to understand both the capabilities and limits of large language models; I wrote in ChatGPT Gets a Computer:

The implication of this approach is that computers are deterministic: if circuit X is open, then the proposition represented by X is true; 1 plus 1 is always 2; clicking “back” on your browser will exit this page. There are, of course, a huge number of abstractions and massive amounts of logic between an individual transistor and any action we might take with a computer — and an effectively infinite number of places for bugs — but the appropriate mental model for a computer is that they do exactly what they are told (indeed, a bug is not the computer making a mistake, but rather a manifestation of the programmer telling the computer to do the wrong thing)…

Large language models, though, with their probabilistic approach, are in many domains shockingly intuitive, and yet can hallucinate and are downright terrible at math; that is why the most compelling plug-in OpenAI launched was from Wolfram|Alpha. Stephen Wolfram explained:

For decades there’s been a dichotomy in thinking about AI between “statistical approaches” of the kind ChatGPT uses, and “symbolic approaches” that are in effect the starting point for Wolfram|Alpha. But now—thanks to the success of ChatGPT—as well as all the work we’ve done in making Wolfram|Alpha understand natural language—there’s finally the opportunity to combine these to make something much stronger than either could ever achieve on their own.

That is the exact combination that happened, which led to the title of that Article:

The fact this works so well is itself a testament to what Assistant AI’s are, and are not: they are not computing as we have previously understood it; they are shockingly human in their way of “thinking” and communicating. And frankly, I would have had a hard time solving those three questions as well — that’s what computers are for! And now ChatGPT has a computer of its own.

I still think the concept was incredibly elegant, but there was just one problem: the user interface was terrible. You had to get a plugin from the “marketplace”, then pre-select it before you began a conversation, and only then would you get workable results after a too-long process where ChatGPT negotiated with the plugin provider in question on the answer.

This new model somewhat alleviates the problem: now, instead of having to select the correct plug-in (and thus restart your chat), you simply go directly to the GPT in question. In other words, if I want to create a poster, I don’t enable the Canva plugin in ChatGPT, I go to Canva GPT in the sidebar. Notice that this doesn’t actually solve the problem of needing to have selected the right tool; what it does do is make the choice more apparent to the user at a more appropriate stage in the process, and that’s no small thing. I also suspect that GPTs will be much faster than plug-ins, given they are integrated from the get-go. Finally, standalone GPTs are a much better fit with the store model that OpenAI is trying to develop.

Still, there is a better way: Altman demoed it.

ChatGPT and the Universal Interface

Before Altman introduced the aforementioned GPTs he talked about improvements to ChatGPT:

Even though this is a developer conference, we can’t help resist making some improvements to ChatGPT. A small one, ChatGPT now uses GPT-4 Turbo, with all of the latest improvements, including the latest cut-off, which we’ll continue to update — that’s all live today. It can now browse the web when it needs to, write and run code, analyze data, generate images, and much more, and we heard your feedback that that model picker was extremely annoying: that is gone, starting today. You will not have to click around a drop-down menu. All of this will just work together. ChatGPT will just know what to use and when you need it. But that’s not the main thing.

You may wonder why I put this section after GPTs, given they were, according to Altman, the main thing: it’s because I think this feature enhancement is actually much more important. As I just noted, GPTs are a somewhat better UI on an elegant plugin concept, in which a probabilistic large language model gets access to a deterministic computer. The best UI, though, is no UI at all, or rather, just one UI, by which I mean “Universal Interface”.

In this case “browsing” or “image generation” are basically plug-ins: they are specialized capabilities that, before today, you had to explicitly invoke; going forward they will just work. ChatGPT will seamlessly switch between text generation, image generation, and web browsing, without the user needing to change context. What is necessary for the plug-in/GPT idea to ultimately take root is for the same capabilities to be extended broadly: if my conversation involved math, ChatGPT should know to use Wolfram|Alpha on its own, without me adding the plug-in or going to a specialized GPT.

I can understand why this capability doesn’t yet exist: the obvious technical challenges of properly exposing capabilities and training the model to know when to invoke those capabilities are a textbook example of Professor Clayton Christensen’s theory of integration and modularity, wherein integration works better when a product isn’t good enough; it is only when a product exceeds expectation that there is room for standardization and modularity. To that end, ChatGPT is only now getting the capability to generate an image without the mode being selected for it: I expect the ability to seek out less obvious tools will be fairly difficult.
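The routing problem can be illustrated with a toy sketch. This is not how ChatGPT decides which capability to invoke; it is a hypothetical dispatcher in which the "decision" is simply whether the message parses as arithmetic, with `calculator` standing in for a deterministic tool and `fallback` standing in for the language model. All names here are assumptions made for illustration.

```python
import ast
import operator
from typing import Callable

# Arithmetic operators the deterministic tool is willing to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str) -> float:
    """Deterministic tool: evaluate +, -, *, / over numeric literals."""

    def walk(node: ast.AST) -> float:
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError("unsupported expression")

    return walk(ast.parse(expression, mode="eval").body)


def route(message: str, fallback: Callable[[str], str]) -> str:
    """Send plain arithmetic to the calculator, everything else to the fallback."""
    try:
        return str(calculator(message))
    except (ValueError, SyntaxError, ZeroDivisionError):
        # Hypothetical stand-in for handing the message to the language model.
        return fallback(message)
```

The sketch works only because the test for "is this math?" is trivial. The hard part described above is that a real model has to learn that judgment for every tool it might call, from inputs that rarely announce which tool they need.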

In fact, it’s possible that the entire plug-in/GPT approach ends up being a dead-end; towards the end of the keynote Romain Huet, the head of developer experience at OpenAI, explicitly demonstrated ChatGPT programming a computer. The scenario was splitting the tab for an Airbnb in Paris:

Code Interpreter is now available today in the API as well. That gives the AI the ability to write and generate code on the fly, or even to generate files. So let’s see that in action. If I say here, “Hey, we’ll be 4 friends staying at this Airbnb, what’s my share of it plus my flights?”

Now here what’s happening is that Code Interpreter noticed that it should write some code to answer this query so now it’s computing the number of days in Paris, the number of friends, it’s also doing some exchange rate calculation behind the scene to get this answer for us. Not the most complex math, but you get the picture: imagine you’re building a very complex finance app that’s counting countless numbers, plotting charts, really any tasks you might tackle with code, then Code Interpreter will work great.

Uhm, what tasks do you not tackle with code? To be fair, Huet is referring to fairly simple math-oriented tasks, not the wholesale recreation of every app on the Internet, but it is interesting to consider for which problems ChatGPT will gain the wisdom to choose the right tool, and for which it will simply brute force a new solution; the history of computing would actually give the latter a higher probability: there are a lot of problems that were solved less with clever algorithms and more with the application of Moore’s Law.
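For a sense of scale, the calculation in Huet's demo amounts to a few lines of code. The following is a hedged sketch of the kind of program a model might write for that query, not the code Code Interpreter actually produced; the function name, parameters, and all figures in the usage note are hypothetical.

```python
def trip_share(
    lodging_total: float,
    lodging_to_home_rate: float,
    friends: int,
    my_flights_home: float,
) -> float:
    """Return one person's cost in the home currency.

    lodging_total is in the lodging's currency; lodging_to_home_rate
    converts it to the home currency; my_flights_home is already in
    the home currency.
    """
    if friends <= 0:
        raise ValueError("friends must be positive")
    lodging_home = lodging_total * lodging_to_home_rate
    # Split the lodging evenly, then add this person's own flights.
    return round(lodging_home / friends + my_flights_home, 2)
```

With made-up inputs such as `trip_share(1200, 1.10, 4, 650)`, the lodging converts to 1,320, each of four friends owes 330, and the total with flights is 980.0. The arithmetic is trivial; what the demo showed is the model recognizing that it should write and run it.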

Consumers and Hardware

Speaking of the first year of Stratechery, that is when I first wrote about integration and modularization, in What Clayton Christensen Got Wrong; as the title suggests I didn’t think the theory was universal:

Christensen himself laid out his theory’s primary flaw in the first quote excerpted above (from 2006):

You also see it in aircrafts and software, and medical devices, and over and over.

That is the problem: Consumers don’t buy aircraft, software, or medical devices. Businesses do.

Christensen’s theory is based on examples drawn from buying decisions made by businesses, not consumers. The reason this matters is that the theory of low-end disruption presumes:

- Buyers are rational

- Every attribute that matters can be documented and measured

- Modular providers can become “good enough” on all the attributes that matter to the buyers

All three of the assumptions fail in the consumer market, and this, ultimately, is why Christensen’s theory fails as well. Let me take each one in turn:

To summarize the argument, consumers care about things in ways that are inconsistent with whatever price you might attach to their utility, they prioritize ease-of-use, and they care about the quality of the user experience and are thus especially bothered by the seams inherent in a modular solution. This means that integrated solutions win because nothing is ever “good enough”; as I noted in the context of Amazon, Divine Discontent is Disruption’s Antidote:

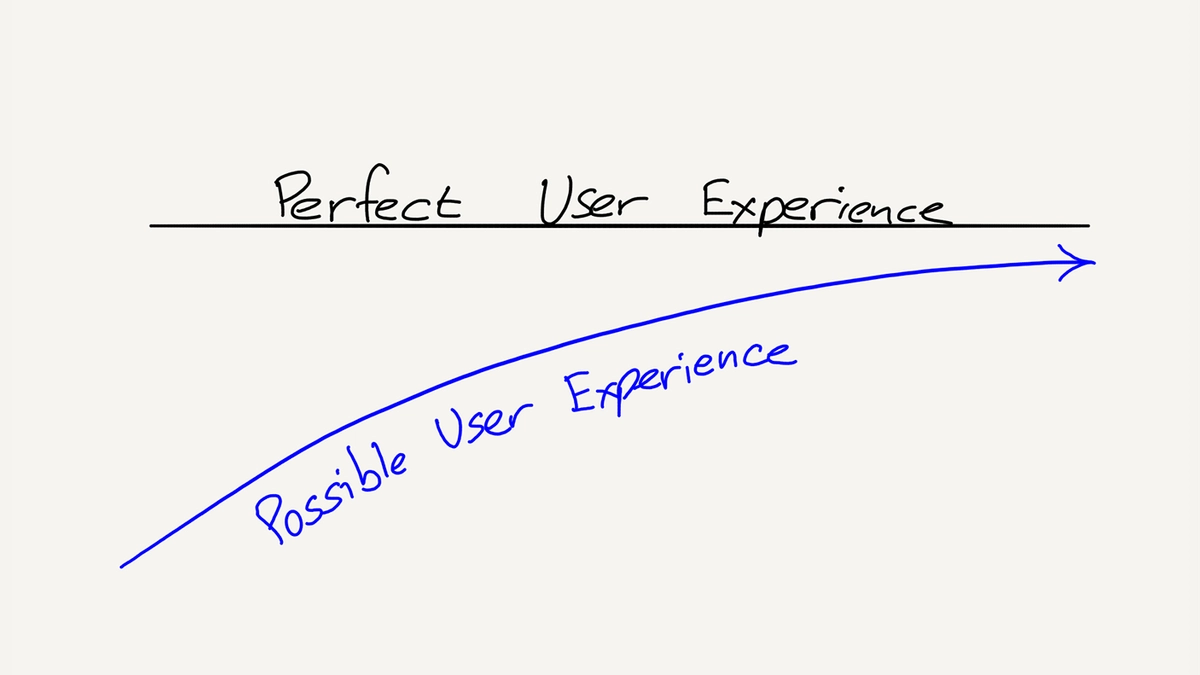

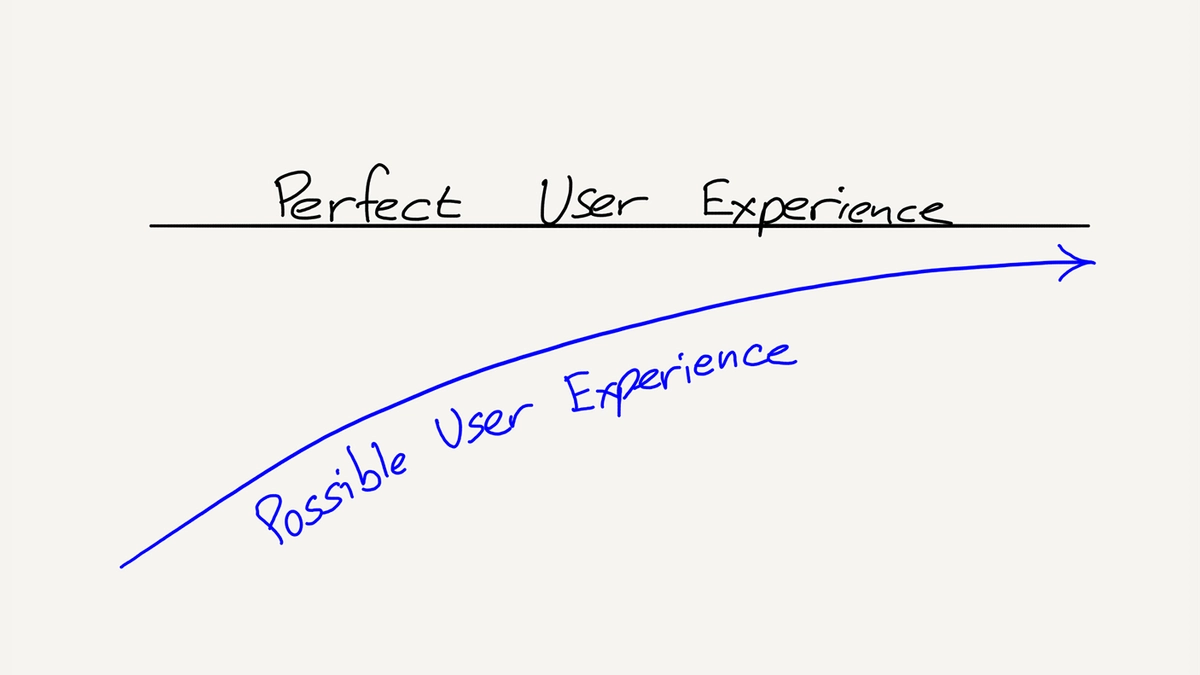

Bezos’s letter, though, reveals another advantage of focusing on customers: it makes it impossible to overshoot. When I wrote that piece five years ago, I was thinking of the opportunity provided by a focus on the user experience as if it were an asymptote: one could get ever closer to the ultimate user experience, but never achieve it:

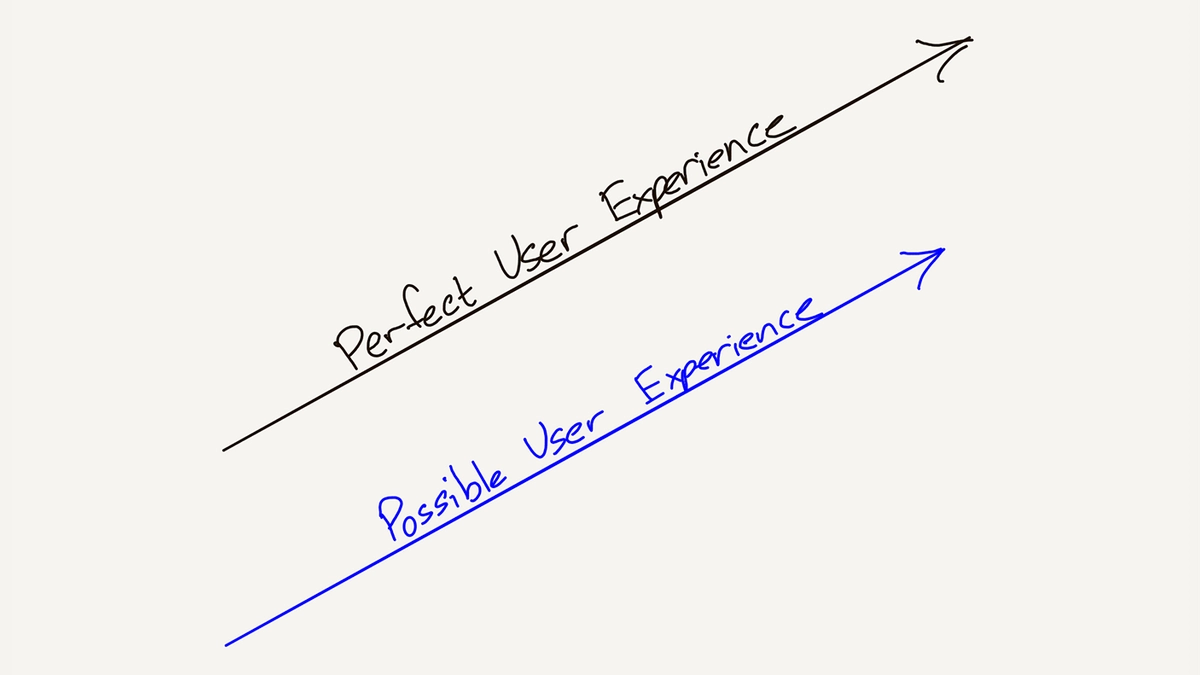

In fact, though, consumer expectations are not static: they are, as Bezos memorably states, “divinely discontent”. What is amazing today is table stakes tomorrow, and, perhaps surprisingly, that makes for a tremendous business opportunity: if your company is predicated on delivering the best possible experience for consumers, then your company will never achieve its goal.

In the case of Amazon, that this unattainable and ever-changing objective is embedded in the company’s culture is, in conjunction with the company’s demonstrated ability to spin up new businesses on the profits of established ones, a sort of perpetual motion machine.

I see no reason why both Articles wouldn’t apply to ChatGPT: while I might make the argument that hallucination is, in a certain light, a feature not a bug, the fact of the matter is that a lot of people use ChatGPT for information despite the fact it has a well-documented flaw when it comes to the truth; that flaw is acceptable, because to the customer ease-of-use is worth the loss of accuracy. Or look at plug-ins: the concept as originally implemented has already been abandoned, because the complexity in the user interface was more detrimental than whatever utility might have been possible. It seems likely this pattern will continue: of course customers will say that they want accuracy and 3rd-party tools; their actions will continue to demonstrate that convenience and ease-of-use matter most.

This has two implications. First, while this may have been OpenAI’s first developer conference, I remain unconvinced that OpenAI is ever going to be a true developer-focused company. I think that was Altman’s plan, but reality in the form of ChatGPT intervened: ChatGPT is the most important consumer-facing product since the iPhone, making OpenAI The Accidental Consumer Tech Company. That, by extension, means that integration will continue to matter more than modularization, which is great for Microsoft’s compute stack and maybe less exciting for developers.

Second, there remains one massive patch of friction in using ChatGPT; from AI, Hardware, and Virtual Reality:

AI is truly something new and revolutionary and capable of being something more than just a homework aid, but I don’t think the existing interfaces are the right ones. Talking to ChatGPT is better than typing, but I still have to launch the app and set the mode; vision is an amazing capability, but it requires even more intent and friction to invoke. I could see a scenario where Meta’s AI is inferior technically to OpenAI, but more useful simply because it comes in a better form factor.

After highlighting some news stories about OpenAI potentially partnering with Jony Ive to build hardware, I concluded:

There are obviously many steps before a potential hardware product, including actually agreeing to build one. And there is, of course, the fact that Apple and Google already make devices everyone carries, with the latter in particular investing heavily in its own AI capabilities; betting on the hardware in market winning the hardware opportunity in AI is the safest bet. That may not be a reason for either OpenAI or Meta to abandon their efforts, though: waging a hardware battle against Google and Apple would be difficult, but it might be even worse to be “just an app” if the full realization of AI’s capabilities depends on fully removing human friction from the process.

This is the implication of a Universal Interface, which ChatGPT is striving to be: it also requires universal access, and that will always be a challenge for any company that is “just an app.” Yes, as I noted, the odds seem long, thanks to Apple and Google’s dominance, but I think there is an outside chance that the paradigm-shifting keynote is only just beginning its comeback.